
Is Repeat Info on a Website Bad for SEO? Fully Explained

Some duplication is acceptable, but excessive repetition can hurt SEO by affecting user experience, crawl efficiency, and content quality signals.

Imagine a library where books are neatly organized by subject, author, and year of publication. But there's a problem.

Many of them have exactly the same content, and some even have the same title but different covers. You might wonder: which book should I choose? Which one is the most authoritative and valuable? Search engines face the same confusion when they deal with duplicate information.

Duplicate content, or repeat information, is a much-debated topic in SEO, and it is surrounded by misconceptions. In this article, we address the question directly and describe the issues you need to consider when dealing with duplicate content.

Understanding Duplicate Content

Simply put, duplicate content is the same text, images, or code appearing within one website or across multiple websites. The term also covers near-duplicate content that is largely the same but differs slightly in wording.

Whether content counts as duplicate is decided by search engine algorithms, which may analyze the text, structure, and code of a web page along several dimensions:

- Text similarity and content formatting
- Unique value of content
- Page structure and code
- URL duplication
- Similar meta tags and titles
- Translated language versions
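To make the first criterion, text similarity, a little more concrete, here is a minimal sketch in Python. It compares two texts by splitting them into overlapping three-word "shingles" and computing the Jaccard similarity of the two sets. This is only an illustration: search engines do not publish their algorithms, and the function names and the shingle size of 3 are assumptions made for this example.

```python
def shingles(text: str, size: int = 3) -> set:
    """Return the set of overlapping word n-grams ("shingles") in a text."""
    words = text.lower().split()
    if len(words) < size:
        # Very short texts become a single shingle (or none if empty).
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard_similarity(a: str, b: str, size: int = 3) -> float:
    """Share of shingles the two texts have in common (0.0 to 1.0)."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa and not sb:
        return 1.0  # two empty texts are treated as identical
    return len(sa & sb) / len(sa | sb)


if __name__ == "__main__":
    page_a = "the quick brown fox jumps"
    page_b = "the quick brown fox sleeps"
    # Two of the four distinct shingles are shared.
    print(jaccard_similarity(page_a, page_b))  # 0.5
```

A score near 1.0 suggests two pages are near duplicates, while a score near 0.0 suggests they are distinct. Where to draw the line is a judgment call for your own site.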

So, is repeat info on a website bad for SEO?

A common misconception is that any repetition hurts rankings. In fact, a website's SEO doesn't get worse just because it contains some repeated information.

That is because Google takes many factors into account when crawling, indexing, and ranking pages.

So let's explore in depth how duplicate content affects SEO.

How Does Duplicate Content Impact SEO?

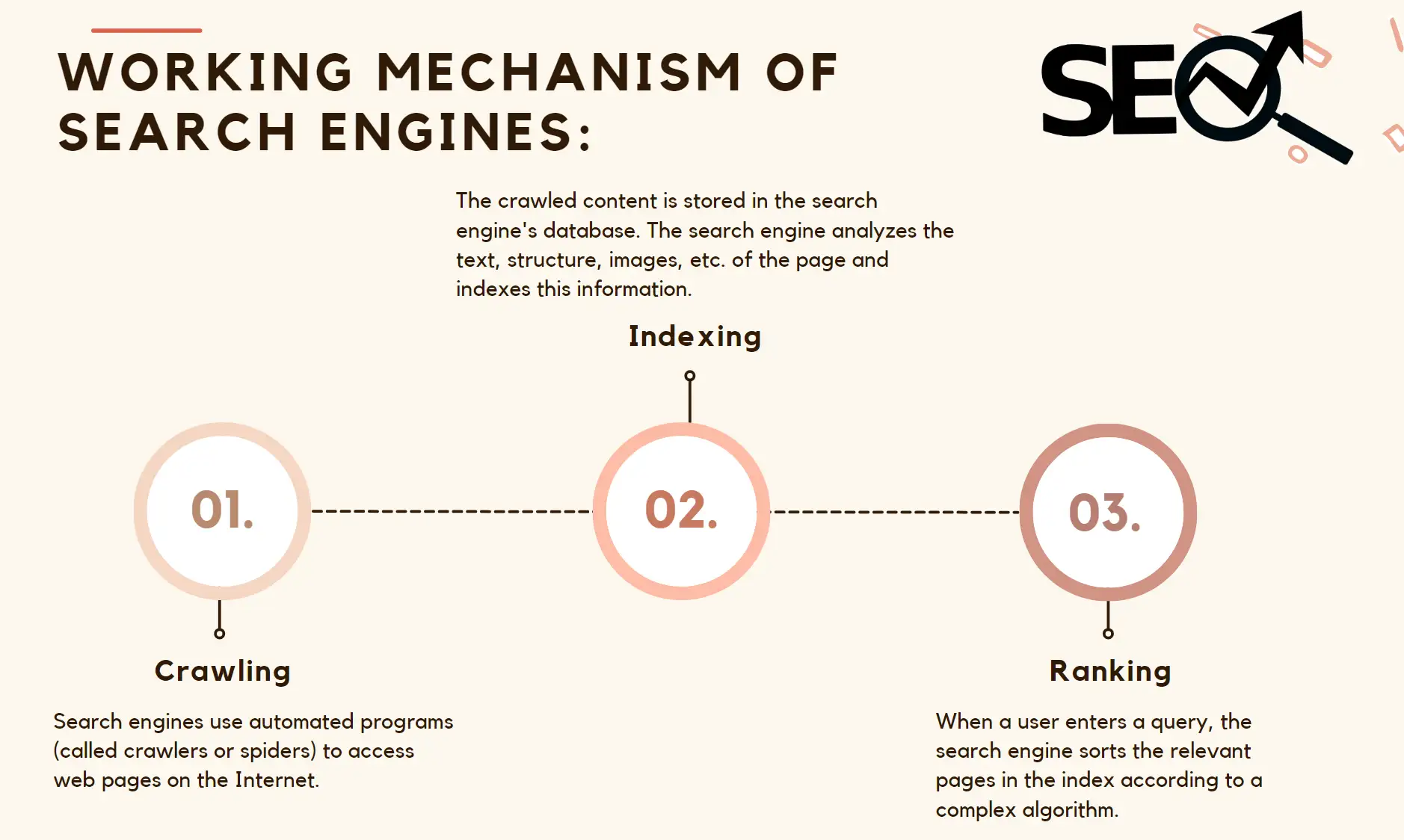

To understand the impact of duplicate content on SEO, we first need to go back to how search engines work: they crawl pages, index them, and then rank them.

Diluted Page Authority

Page authority is a measure of how authoritative and relevant a web page is for a particular topic or keyword. The underlying idea goes back to Google's PageRank algorithm, which uses links to help determine which pages should rank higher in search results.

If multiple pages contain duplicate content, search engines may not be able to determine accurately which page should receive more weight, and the pages can end up performing poorly in search results.

A few of the main ways duplicate content dilutes authority:

- Multiple URLs pointing to the same content
- Duplicate or similar content pages
- External link assignment
- Internal link structure

If the same content exists under different URLs (for example, multiple pages generated through categories, tags, or parameterized URLs), search engines may treat these URLs as different pages. Although the content is the same, the weight is spread across these pages, so no individual page gets the full SEO weight it deserves.

Overall, the core problem with diluted authority is that search engine algorithms can't tell which page is the best one. When several pages carry duplicate or similar content, they pull each other down in the weight distribution, weakening the SEO performance of all of them.
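As an illustration of how several URLs can point to the same content, the sketch below normalizes URL variants and groups the ones that collapse to the same address. It is a simplified example under stated assumptions: the list of tracking parameters, the choice to drop `www.`, and the choice to force `https` are all hypothetical rules that would need to match how your own site actually serves pages.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that do not change page content on this example site.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}


def normalize_url(url: str) -> str:
    """Collapse common URL variants into one comparable form."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[4:]  # assumption: www and non-www serve the same pages
    path = parts.path.rstrip("/") or "/"
    # Drop tracking parameters and sort the rest so order does not matter.
    params = sorted(
        (k, v) for k, v in parse_qsl(parts.query) if k not in TRACKING_PARAMS
    )
    # Assumption: the site is served over https only; fragments are ignored.
    return urlunsplit(("https", host, path, urlencode(params), ""))


def group_variants(urls):
    """Map each normalized URL to the list of original URLs behind it."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize_url(url)].append(url)
    return dict(groups)


if __name__ == "__main__":
    crawled = [
        "http://WWW.Example.com/shoes/?utm_source=newsletter",
        "https://example.com/shoes?sessionid=42",
        "https://example.com/shoes",
    ]
    for canonical, variants in group_variants(crawled).items():
        print(canonical, "<-", len(variants), "variants")
```

Any group with more than one variant is a candidate for a canonical tag or a redirect, both of which are covered later in this article.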

Indexation Issues

As described in a previous article, after crawling a web page, search engines analyze its content and store it in a huge database. This process is called indexing, and a page can appear in search results only after it has been indexed. A page that fails the indexing stage will not be shown to users even though it exists.

Crawling is also limited by something called a "crawl budget": search engine crawlers will crawl only a limited number of pages on a website in a given period. When a website contains duplicate pages, the crawler spends time and resources on those similar pages, wasting crawl budget that should go to high-value, unique content.

Negative UX

For users, clicking on multiple pages but seeing almost the same content is like watching a movie with the same scene over and over again.

Duplicate content lowers user satisfaction. Faced with the same content again and again, users quickly leave such uninspired websites, which results in a higher bounce rate and shorter dwell time.

Google's Policy Statement on Duplicate Content

Google has said that duplicate content on its own does not earn a website a penalty. This is because the search engine's main concern is to provide users with the most relevant and useful results for their searches. Duplicate content therefore does not directly lower rankings, but it may affect which version of the content is indexed and displayed. To optimize a website's SEO performance, website owners should focus on the uniqueness of their content, make judicious use of canonical tags and redirects, and follow search engine best practices to ensure the quality of their content and user experience.

How to Avoid Duplicate Content?

Creating Original Content

Users like things that are creative and valuable, and Google follows this preference. We write website content around keywords, that is, the words users search for, but we also need to address the real needs of the audience. What are their pain points? This is the top priority when writing original content.

Then make sure that the content is high quality and original. Only content that is truly readable and insightful will engage readers.

Using Canonical Tags

Because a website has many pages, some overlap in topics is inevitable, especially on blog pages. When you have similar or duplicate pages, canonical tags tell search engines which version of the page is the main one. Add a canonical tag to every variant and point it to the main version. This helps to consolidate page authority.
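To show what a canonical tag looks like in practice, here is a small Python sketch that reads a page's HTML and reports which URL its `<link rel="canonical">` tag points to. The example page and its URLs are hypothetical, and the helper names (`CanonicalFinder`, `find_canonical`) are made up for this illustration. It is a quick audit aid, not a full HTML validator.

```python
from html.parser import HTMLParser

# Hypothetical variant page: a filtered product listing that points
# search engines to the main listing as its canonical version.
VARIANT_PAGE = """
<html>
  <head>
    <title>Shoes, sorted by price</title>
    <link rel="canonical" href="https://example.com/shoes" />
  </head>
  <body>...</body>
</html>
"""


class CanonicalFinder(HTMLParser):
    """Records the href of the first <link rel="canonical"> tag seen."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values:
            self.canonical = attributes.get("href")


def find_canonical(html: str):
    """Return the canonical URL declared in the HTML, or None if absent."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical


if __name__ == "__main__":
    print(find_canonical(VARIANT_PAGE))  # https://example.com/shoes
```

Running a check like this over every variant of a page is one way to confirm that they all point to the same main version.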

Using 301 Redirects

A 301 redirect is commonly used by websites to avoid duplicate content. 301 is an HTTP status code that indicates a permanent redirect: it tells search engines and users that a page has moved for good, and visitors who request the old address are sent automatically to the new page.

If a page or URL has changed, a 301 redirect ensures that users reach the correct page instead of a 404 error page. This not only preserves the user's browsing experience but also transfers the SEO weight from the old page to the new one.
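The mechanics of a 301 redirect can be sketched with Python's standard library. The redirect map and paths below are hypothetical, and in practice redirects are usually configured in your web server, CMS, or hosting platform rather than written by hand. The sketch only shows what happens on the wire: the server answers with status 301 and a `Location` header naming the new address.

```python
from http import HTTPStatus
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical mapping from retired URLs to their permanent replacements.
REDIRECTS = {
    "/old-pricing": "/pricing",
    "/blog/seo-tips-2023": "/blog/seo-tips",
}


def resolve(path: str):
    """Return (status, location) for a requested path."""
    target = REDIRECTS.get(path)
    if target is not None:
        return HTTPStatus.MOVED_PERMANENTLY, target  # 301
    return HTTPStatus.OK, path


class RedirectHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        status, location = resolve(self.path)
        if status == HTTPStatus.MOVED_PERMANENTLY:
            # Tell browsers and crawlers where the page now lives.
            self.send_response(status)
            self.send_header("Location", location)
            self.end_headers()
        else:
            self.send_response(HTTPStatus.OK)
            self.send_header("Content-Type", "text/plain; charset=utf-8")
            self.end_headers()
            self.wfile.write(f"Content for {self.path}\n".encode("utf-8"))


if __name__ == "__main__":
    # Serves on localhost:8000 until interrupted.
    HTTPServer(("127.0.0.1", 8000), RedirectHandler).serve_forever()
```

Keeping the mapping in one place, as `REDIRECTS` does here, makes it easier to avoid redirect chains, where one old URL points to another old URL.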

Monitoring Your Content

SEO is not a matter of achieving perfection once but a process of constant optimization, so it is a good habit to test the functionality and performance of your website on a regular basis.

Regular checks will reveal not only duplicate content but also a variety of other issues related to site performance and user experience.

How to Check Duplicate Content?

Finding duplicate content is crucial to keeping your website original and performing well in search. Identifying it is an important task for both website owners and SEO specialists.

1. Manual Checks

The easiest and most direct way is to copy a passage from the article, paste it into Google's search bar, and enclose it in quotation marks. The search engine will then show whether the same text appears on other pages. Any matching results point to duplicated content that needs to be revised.

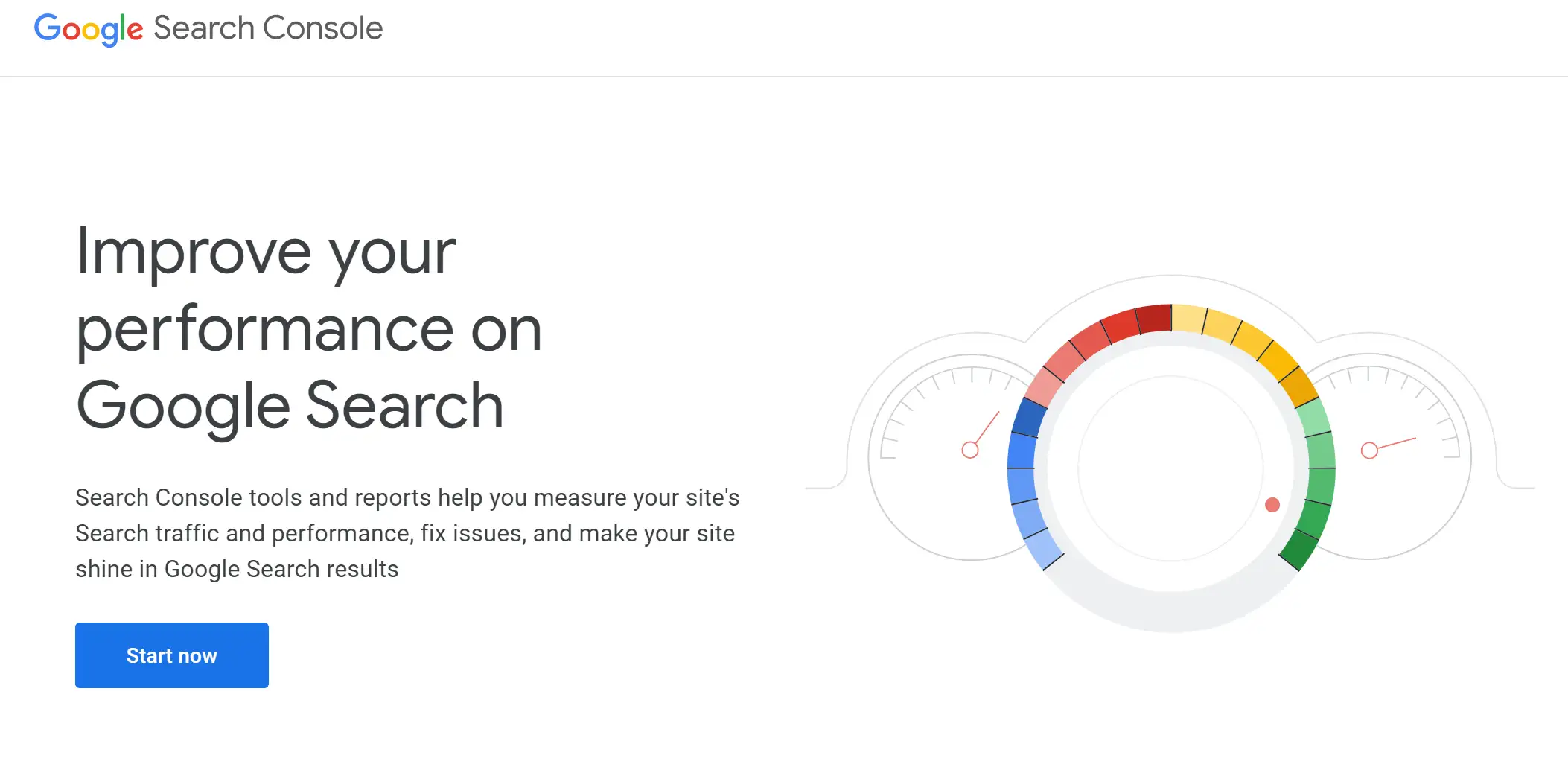

2. Google Search Console

Google Search Console reports on duplicate content issues. In the page indexing report, look for the "Duplicate without user-selected canonical" status to identify problematic pages, and then check which URLs Google has already indexed.

3. Using Online Tools

Many online tools can also help you identify duplicate content on and off your website:

- Siteliner: This tool scans your website for pages with duplicate content and shows you the number and percentage of duplicate words.
- Screaming Frog SEO Spider: This tool can crawl both small and large websites to find duplicate content issues based on page titles, meta descriptions, and content.
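If you want a rough idea of what such a scan involves, the sketch below groups pages whose body text is identical after whitespace and letter case are normalized. It is not how Siteliner or Screaming Frog work internally, which this article does not cover. The page data is hypothetical, and the approach only catches exact duplicates, not near duplicates.

```python
import hashlib
from collections import defaultdict


def fingerprint(text: str) -> str:
    """Hash the text after normalizing case and whitespace."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_exact_duplicates(pages: dict) -> list:
    """Return groups of URLs whose body text has the same fingerprint."""
    groups = defaultdict(list)
    for url, body in pages.items():
        groups[fingerprint(body)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


if __name__ == "__main__":
    # Hypothetical crawl result: URL -> extracted body text.
    site = {
        "/shoes": "Our full range of running shoes.",
        "/category/shoes": "Our full range of   running SHOES.",
        "/about": "We have been selling shoes since 2010.",
    }
    print(find_exact_duplicates(site))
```

In this sample data, `/shoes` and `/category/shoes` end up in the same group because they differ only in spacing and capitalization.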

4. Plagiarism Detection Software

Plagiarism detectors are also available online and can help you find content across the web that duplicates or closely resembles yours.

- Grammarly: Grammarly not only helps correct grammatical problems in articles but also provides a plagiarism-checking feature that compares your article with a huge database of web content and identifies duplicate passages.
- Scribbr: Scribbr's plagiarism checker shows which passages of your text match existing sources and how similar they are, so you can revise your content to lower its level of duplication.

5. Content Management System

A content management system (CMS) can also help: many offer built-in functionality, plug-ins, or third-party integrations that detect and manage duplicate content in a variety of ways.

Conclusion

In order for librarians to quickly recommend the most valuable books, libraries need to make sure that the content of each book is unique and original. By the same token, SEO needs to avoid duplicating information and ensure that each page has a unique value in order for search engines to crawl and display your site content efficiently.

Your website can build a strong and convincing digital presence over time only by providing high-quality content that users value. That said, we don't have to be afraid of duplicate content. By upholding the principles that content is king and users come first, your website will convey real value to its visitors, which is the best SEO practice. And when we do encounter duplicate content on a website, we can use the right tools and strategies to minimize the risk.

Want more information about SEO? Read more on the Wegic Blog.


Written by

Kimmy

Published on

Dec 4, 2024
